It was excellent news when it became possible to back up Windows 8 client machines, and not just servers, to Windows Azure.

http://azure.microsoft.com/blog/2014/12/16/azure-backup-announcing-support-for-windows-client-operating-system/

I downloaded the software on my laptop and ran a backup from the GUI. It worked just the same as on a server - excellent. Then I thought I would do some automation with the PowerShell cmdlets.

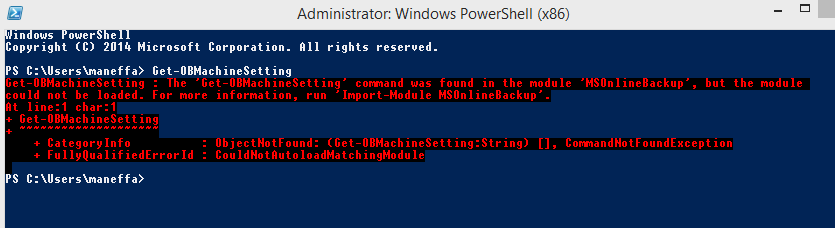

They didn't work. Here is an example:

Get-OBMachineSetting

This returned a 'module could not be loaded' error message, which was interesting. After poking around for a while I found nothing obviously amiss.

I changed tack slightly and tried the same thing in Sapien PowerShell Studio. That worked fine.

It suddenly dawned on me - I had pinned the 32-bit version of PowerShell to the taskbar instead of the 64-bit one, and these cmdlets are 64-bit only! The default PowerShell on a 64-bit computer is 64-bit, so I had 'cleverly' managed to override this while customising my taskbar. Of course you may come across some cmdlets which are 32-bit only, so it is something to bear in mind. With hindsight it was obvious from the PowerShell window title, which has (x86) in it, but I had personally never seen any difference between 32-bit and 64-bit on the cmdlets I had used.
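A quick check at the top of a script would have saved me some head scratching. The following is only a minimal sketch: the function name Test-Is64BitProcess is made up for illustration, and it relies on the pointer size being 8 bytes in a 64-bit process.

```powershell
# Hypothetical helper (not part of the Azure Backup module): returns $true when
# the current PowerShell process is 64-bit.
function Test-Is64BitProcess {
    # IntPtr is 8 bytes wide in a 64-bit process and 4 bytes in a 32-bit one.
    return ([IntPtr]::Size -eq 8)
}

if (Test-Is64BitProcess) {
    Write-Output 'Running 64-bit PowerShell.'
} else {
    Write-Output 'Running 32-bit (x86) PowerShell; 64-bit-only modules may fail to load.'
}
```

Running this from the pinned (x86) shortcut should take the second branch, which is the situation described above.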

This reminds me of the subtle differences between the 32-bit and 64-bit versions of ODBC, which first became a significant problem when 64-bit versions of the server came out with Server 2008, and you had to run %windir%\syswow64\odbcad32.exe instead of just typing ODBC if you wanted to use the 32-bit driver.

Thankfully, if you type ODBC now, MS have helpfully documented both versions.

IT Blog

IT in the medium size organisation

Monday 2 March 2015

Sunday 12 October 2014

Sun setting on excellent Microsoft Small Business Server 2003...

The best software is always the software you never think about, because it just works, day after day, month after month, year after year. One of the best pieces of software to come out of Microsoft was SBS2003. Recent cloud offerings have made the cloud a better solution for some small business users - particularly for not hosting email on premises for a small number of users, especially as Exchange 2003 is very disk IO hungry compared to later versions and relatively difficult to restore if it goes wrong, with the attendant downtime. Cloud email is much easier to deploy across multiple devices once out of the office, and there are no (relatively expensive) certificates to buy or manage.

But file, print and domain resources are still handled very well by SBS2003. The most popular cloud storage providers generally do not handle large file sync well (Dropbox excepted, which syncs only the changes, an excellent feature, and has local LAN sync), and uplink bandwidth is usually a major problem unless you are using something like VDSL, e.g. BT Infinity. If you can script a decent backup on SBS2003 using ntbackup.exe to, say, a swappable local USB disk that gets taken off site, you will be home and dry. Chances are you will never access it anyway, especially if you were enlightened enough to make sure you had a decent backup in the first place - I have always found that those who make the most viable backups need them the least. Add a quick scheduled robocopy /mir of the working files on the server (which change very little) to another workstation on the domain, to go with the .bkf backup file, and you have a very simple and robust setup that small business owners can understand, at minimal cost, and that lets them access files in an emergency without any third-party assistance.

Once the small business (or more likely a third party) has configured SBS2003 to its liking, it just works, and works and works. You can look at the cloud at your leisure, or maybe not, as SBS2003 support ends next summer (2015). It will indeed be a sad day as SBS2003 servers (almost always on real hardware in that era, purchased from Dell etc.) are shut down to make way for a 'better' solution. Migrating to a newer 64-bit Windows platform is relatively costly and complex, and provides few benefits that I can see, except being on a supported platform. Having just installed many 2012 R2 servers during a hardware refresh, I just can't see enough advantages to warrant the change for many small businesses, as the cost is relatively large, especially in third-party time.

I can't help but think that some small businesses are going to pay more overall for their IT solution than before and be left with a worse solution. I might look at other solutions before going totally cloud, depending on the circumstances. One solution is to take, say, a mirrored-disk NAS (QNAPs run very well) and use that with file access from the cloud where required. Only you will know the best solution. For SBS2003 servers that are never accessed from the internet I would be reasonably happy to run them without any more patches, although I can almost guarantee that there will be issues getting new clients (e.g. Windows 10) running 100% with them, which will force their obsolescence.

Maybe it's time to look at that SBS2003 sitting in the corner, get some of the caked-on dust off it, clean it up and think how much service it has given in the last few years for so little maintenance. You won't be seeing it much longer...

Wednesday 24 September 2014

Exchange 2013 New-MailboxExportRequest with a ContentFilter of ALL limits results to 250?

I was searching a large journal mailbox for some mail items from a couple of years ago, using keywords that I knew would bring back a reasonably large number of results. The OWA 2013 search screen just went blank as I scrolled down it. This was repeatable. I had never seen this before, but the mailbox/inbox folder in question has 6 million journalled emails in it, so I can understand that this is well outside the design (and probably tested) limits.

This journal mailbox lives on its own VM (i.e. 1 physical server = 1 VM = 1 mailbox database = 1 mailbox, an unusual combination I am sure!) on a powerful but now out-of-warranty server, and uses Hyper-V Replica to keep a hot backup of the machine, which is not critical. It would be beneficial to split it into, say, one mailbox per year, but I have not got round to investigating that yet.

When the OWA search failed to retrieve records that I knew existed, I decided to use PowerShell instead, using the ContentFilter search property ALL, which Microsoft describes as follows:

http://technet.microsoft.com/en-us/library/ff601762(v=exchg.150).aspx

"This property returns all messages that have a particular string in any of the indexed properties. For example, use this property if you want to export all messages that have 'Ayla' as the recipient, the sender, or have the name mentioned in the message body."

Searching all indexable properties:

new-mailboxexportrequest -mailbox journalmailbox -filepath "\\unc\share\filename.pst" -ContentFilter {(all -like '*keyword1*') -and (all -like '*keyword2*') -and (Sent -gt '01/01/2010') }

This job finished in a few seconds. I looked in the pst file and it contained 250 records. I changed the search criteria with different dates and keywords. There were always exactly 250 results. It looks like 250 is the hard-coded results limit when using the ALL search.

I worked around the problem by splitting my query and adding a date filter to pull back only 1 month at a time, which gave me 12 pst files for a year. This was much more work, but it was not worth scripting for a one-off job. Then I tried using the same keywords to search only the subject.

Searching the subject only:

new-mailboxexportrequest -mailbox journalmailbox -filepath "\\unc\share\filename.pst" -ContentFilter {(subject -like '*keyword1*') -and (subject -like '*keyword2*') -and (Sent -gt '01/01/2010') }

This search brought back all the records meeting the criteria (not just 250), but took much longer to run. It did not bring back all the records I was looking for, as it does not look 'everywhere' in the message.

I can understand why the default behaviour of ALL is to limit the results. Using the index means a lookup in the main email database for each item found in the search index. In SQL Server this kind of behaviour is called a bookmark lookup (Exchange is not exactly the same as SQL Server, but it is comparable): a search in an index points to where the actual data resides, and following that pointer is a very expensive operation, frequently involving random disk lookups. Imagine if someone searched for something like the word THE, which would be in almost all emails: it would create a real performance hit.

The documentation needs to state that (presumably for performance reasons) only 250 records will be returned, or a switch needs to be added that lets the user specify the maximum number of records to return, acknowledging that performance will be hit. Or am I missing something?
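For anyone who has to repeat the month-at-a-time workaround, it could be scripted along these lines. This is only a rough sketch under stated assumptions: Get-MonthlyContentFilter is a made-up helper name, the MM/dd/yyyy date format and the -ge/-lt operators on Sent are assumptions modelled on the -gt filter shown earlier, and any single month with more than 250 hits would presumably still be capped.

```powershell
# Hypothetical helper (not from Exchange): emits one object per calendar month,
# each carrying a ContentFilter string limited to that month.
function Get-MonthlyContentFilter {
    param(
        [Parameter(Mandatory = $true)][int]$Year,
        [Parameter(Mandatory = $true)][string[]]$Keyword
    )

    # Invariant culture keeps the MM/dd/yyyy output independent of the local locale.
    $culture = [System.Globalization.CultureInfo]::InvariantCulture

    # One "(All -like '*keyword*')" clause per keyword, as in the ALL search above.
    $keywordClauses = @($Keyword | ForEach-Object { "(All -like '*$($_)*')" })

    foreach ($month in 1..12) {
        $start = New-Object System.DateTime -ArgumentList $Year, $month, 1
        $end = $start.AddMonths(1)

        # Half-open window: on or after the 1st, before the 1st of the next month.
        $dateClauses = @(
            "(Sent -ge '$($start.ToString('MM/dd/yyyy', $culture))')",
            "(Sent -lt '$($end.ToString('MM/dd/yyyy', $culture))')"
        )

        [pscustomobject]@{
            Month  = $start.ToString('yyyy-MM', $culture)
            Filter = ($keywordClauses + $dateClauses) -join ' -and '
        }
    }
}

# Print the filters for review; nothing is exported here.
Get-MonthlyContentFilter -Year 2012 -Keyword 'keyword1', 'keyword2' | ForEach-Object {
    Write-Output "$($_.Month): $($_.Filter)"
    # On an Exchange server, something like the following could then be run:
    # New-MailboxExportRequest -Mailbox journalmailbox -FilePath "\\unc\share\journal-$($_.Month).pst" -ContentFilter $_.Filter
}
```

The export call is left commented out on purpose, so the generated filters can be checked (including the date format the server expects) before anything is run against a real mailbox.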

Saturday 12 July 2014

Exchange 2010 (journal) large mailbox move limit reached?

Journal mailboxes seem to have little said about them. I wonder how many people are using them. It would be good to store 50 GB chunks of journal mailboxes on Office 365, but that is expressly not allowed. Now that would be a cheap solution!

The journal mailbox is on Exchange 2010 (SP3 RU6 at time of writing). It is pretty large - 440 GB, 5.5 million messages, all in one folder(!). I decided to move it to another 2010 database as part of an Exchange 2010 to 2013 migration. The move crashed halfway through with a complaint about too many bad items, so I ran it from PowerShell with a very high bad item count using the AcceptLargeDataLoss switch. (I note that the Exchange 2013 interface accepts large item loss and notes it in the log, which is better than forcing PowerShell usage.) The move failed again about halfway through, after a day or so of running, with another bad item count error. I suspected this was a bogus error and that I had reached the limit of what Exchange 2010 could move to another Exchange 2010 server in one mailbox.

I worked round the problem by using a DAG to copy the mailbox database in question to the same server, which was much quicker anyway, as it copies the blocks of the database as opposed to the messages individually. I could also have dismounted the database and copied it, then used database portability to bring it up in its new location, but I preferred it to be online/mounted all the time.

The journal mailbox subsequently had to be moved from Exchange 2010 to 2013, and that was the moment of truth. Moment is not quite the word, as it took several days to move, but it moved without an error. It seems that Exchange 2013 has increased the limits for extreme mailbox moves. As Office 365 supports 50 GB mailboxes, I was hoping that it would work OK up to, say, 10 times that size, and that was the case. I need to think how to come up with a better journal mailbox partition mechanism for the longer term. Maybe one mailbox per year or something like that.

Until Microsoft come up with a solution that supports a journal mailbox system on Office 365 many people won't/can't move all their email infrastructure to the cloud.

Exchange 2013 PowerShell broken after server 'rename' due to certificate problem

Everyone knows you cannot rename an Exchange Server once you have installed Exchange on it.

In this example the server needed to be renamed. Exchange 2013 was uninstalled, and the server was removed from the domain, renamed, then added back to the domain.

Exchange 2013 was installed again. I noticed that some Exchange directories under program files\exchange were not removed by the uninstallation, but decided that MS knew what they were doing. There were several GB worth of directories. I pondered on what else was left behind...

Installation gave no errors. The server was then added as a member of a DAG, and some errors came up when the DAG was set up. Interesting... firing up PowerShell on the server in question gave a cryptic error and little else.

Runspace Id: 22b854a9-cbd4-4567-97b6-f3aa52c12249 Pipeline Id: 00000000-0000-0000-0000-000000000000. WSMan reported an error with error code: -2144108477.

Error message: Connecting to remote server ex2.mydomain.local failed with the following error message : [ClientAccessServer=EX,BackEndServer=ex2.mydomain.local,RequestId=a34012f8-4b26-4ac7-9cb4-b57657fb9adf,TimeStamp=03/07/2014 16:31:29] [FailureCategory=Cafe-SendFailure] For more information, see the about_Remote_Troubleshooting Help topic.

Deleting and recreating the PowerShell virtual directory made no difference.

http://technet.microsoft.com/en-us/library/dd335085(v=exchg.150).aspx

Then my colleague noted a strange event in the event logs:

An error occurred while using SSL configuration for endpoint 0.0.0.0:444. The error status code is contained within the returned data.

This was more like it. HTTPS was pointing to a non-existent certificate - probably the original self-signed certificate from the earlier installation, which was deleted during 'manual' tidying up. The new self-signed certificate was bound and everything started working again.
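With hindsight, a binding that points at a missing certificate can be spotted from the command line. The sketch below is a rough illustration rather than what we actually ran: Get-SslBindingHash is a made-up helper, it assumes English-language netsh output containing a "Certificate Hash" line, and it assumes the certificate should live in the LocalMachine\My store.

```powershell
# Hypothetical helper: pull the certificate hash out of the text that
# 'netsh http show sslcert' prints for a binding (English output assumed).
function Get-SslBindingHash {
    param([string[]]$NetshOutput)
    foreach ($line in $NetshOutput) {
        if ($line -match 'Certificate Hash\s*:\s*([0-9a-fA-F]+)') {
            return $Matches[1].ToUpperInvariant()
        }
    }
    return $null
}

# Only attempt the live check where netsh is available (i.e. on Windows).
if (Get-Command netsh -ErrorAction SilentlyContinue) {
    # 0.0.0.0:444 is the endpoint named in the event log entry above.
    $output = netsh http show sslcert ipport=0.0.0.0:444
    $hash = Get-SslBindingHash -NetshOutput $output

    if (-not $hash) {
        Write-Output 'No certificate hash found for 0.0.0.0:444.'
    } elseif (Test-Path "Cert:\LocalMachine\My\$hash") {
        Write-Output "Binding uses certificate $hash, which is present in the store."
    } else {
        Write-Output "Binding uses certificate $hash, which is NOT in LocalMachine\My."
    }
}
```

The last branch is the state this server appeared to be in: the binding still existed, but the certificate behind it had gone.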

I think next time an Exchange server needs 'renaming' I will uninstall Exchange, then reinstall Windows from scratch... I doubt that the Exchange development team spends much time investigating problems like the above.

Exchange 2010 to 2013 OWA delays after migration needs IIS application pool recycling

Migrating batches of OWA users from Exchange 2010 SP3 RU6 to 2013 CU5

When the web page shortcuts were updated to point to the CAS2013 servers (e.g. https://cas2013/owa) instead of the CAS2010 servers just prior to the mailbox moves, these users were unable to open their Deleted Items folder. This was a minor error, so it was ignored, as the users were only in this state for a short time. Presumably there is a subtle bug when CAS2013 proxies to CAS2010. Everything else worked perfectly. During testing no-one had been in the Deleted Items folder!

After moving mailboxes to Exchange 2013, a small number of users were getting a major error where it appeared that CAS2013 was still attempting to proxy back to CAS2010, and CAS2010 was attempting to retrieve data from Mailbox2013! Obviously OWA 2010 does not know how to get data from a 2013 mailbox, so it fails.

The error always had the same pattern.

Remember that the original request is to https://CAS2013/OWA, leaving the CAS2013 to decide how to handle the request.

Request

Url: https://CAS2010.domain.local:443/owa/forms/premium/StartPage.aspx (proxied in error to 2010)

User: AUser

EX Address: /o=domain/ou=domain/cn=Recipients/cn=AUser

SMTP Address: AUser@domain.com

OWA version: 14.3.195.1

Mailbox server: MAILBOX2013.domain.local

Exception

Exception type:

Microsoft.Exchange.Data.Storage.StoragePermanentException

Exception message: Cannot get row count.

Call stack

Microsoft.Exchange.Data.Storage.QueryResult.get_EstimatedRowCount() ...

Rest omitted for brevity

After looking round, the problem appeared similar to this one with the EAS directory/mobile devices.

However, all the EAS devices picked up the new settings within 10 minutes of the mailbox move...

I manually recycled the application pools and the mailboxes magically started working in OWA.

It looks like a different variant of the same bug. Let's hope they fix it soon...
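I did the recycling by hand in IIS Manager, but it could be scripted on the CAS2013 server along these lines. Treat this as a sketch only: Select-AppPoolName is a made-up helper, the 'MSExchangeOWA*' pattern is my assumption about which pools matter, and the actual recycle is left commented out.

```powershell
# Hypothetical helper: pick the application pool names that match a wildcard pattern.
function Select-AppPoolName {
    param(
        [string[]]$Name,
        [string]$Pattern = 'MSExchangeOWA*'   # assumed pattern for the OWA pools
    )
    $Name | Where-Object { $_ -like $Pattern }
}

# Only touch IIS where the WebAdministration module is installed.
if (Get-Module -ListAvailable -Name WebAdministration) {
    Import-Module WebAdministration
    $allPools = Get-ChildItem IIS:\AppPools | ForEach-Object { $_.Name }

    foreach ($pool in (Select-AppPoolName -Name $allPools)) {
        Write-Output "Would recycle application pool: $pool"
        # Uncomment to actually recycle the pool:
        # Restart-WebAppPool -Name $pool
    }
}
```

Recycling a pool drops the sessions it is serving, so this is something to run out of hours or immediately after a batch of mailbox moves.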

Friday 4 July 2014

Outlook authentication problem after upgrading from Exchange 2010 SP2 to SP3 plus roll up

We were upgrading an Exchange 2010 SP2 server to Exchange 2010 SP3 + RU6 in readiness for an Exchange 2013 migration.

The service pack was applied, and then the rollup. No problems.

The next day some Outlook users were complaining of an authentication prompt 'randomly' asking for username and password when they were doing nothing in Outlook. After much investigation we tracked it down to the offline address book. This explained why the authentication prompt was only appearing infrequently: it appeared when Outlook went to download the address book in the background. After double-checking the OAB physical directory and virtual directory permissions etc., and confirming that oab.xml could be opened as you would expect, there was no obvious cause for this error. Terminal server users did not have this problem, as they were using non-cached mode without the offline address book. Changing a cached Outlook user to direct (non-cached) mode would also sort the problem, by bypassing the offline address book in the same manner. My colleague suggested we reboot the server, but this was more in desperation than anything else. The server had not been rebooted since the application of SP3/RU6.

The server was rebooted and... magically the prompt went away. How bizarre is that? When you first install Exchange, the setup programme tells you to reboot before putting the server into production, but the service pack does not. The moral of the story is to always reboot after applying a service pack. I have no idea what could have happened, but I was more than happy when the reboot sorted it, as the next avenue was to open an expensive support ticket with MS support.
